Stanford University’s Center for Research on Foundation Models (CRFM) in California has partnered with Arabic AI, a UAE-based enterprise AI technology provider, to launch HELM Arabic. The new leaderboard offers a transparent, reproducible framework for evaluating large language models (LLMs) on Arabic-language benchmarks.

Bridging the Evaluation Gap

The initiative addresses a significant gap in the AI landscape. Although Arabic is spoken by more than 400 million people, it has historically been underserved by AI models. It also lacks the rigorous evaluation frameworks that exist for English and other major languages.

Because every model is evaluated with the same openly documented methodology, HELM Arabic allows objective, like-for-like comparisons between open-weight and commercial models. The goal is to foster greater innovation and accountability within the rapidly growing Arabic AI ecosystem.

A Transparent and Reproducible Framework

The project extends Stanford’s HELM (Holistic Evaluation of Language Models) framework, a well-regarded open-source platform for standardized, holistic model evaluation. The Arabic version incorporates seven established benchmarks that are widely used by the research community to test model capabilities.

These benchmarks include AlGhafa (multiple-choice questions), Arabic EXAMS (high school exam questions), MadinahQA (Arabic language and grammar), AraTrust (region-specific safety evaluation), ALRAGE (passage-based question answering), and translated versions of MMLU.

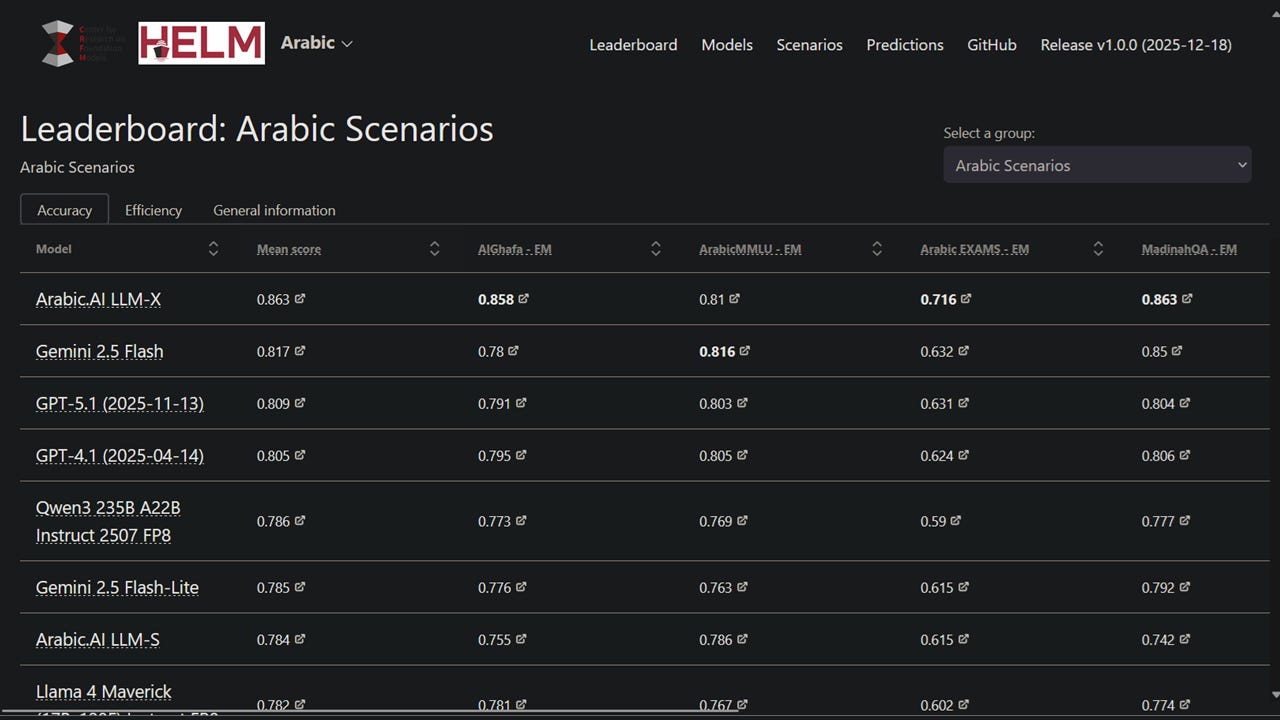

Initial Leaderboard Rankings

In the initial rankings, Arabic AI’s flagship model, Arabic.AI LLM-X (also known as Pronoia), secured the top position with the highest mean score across the benchmarks.

Among open-weight multilingual models, Qwen3 235B was the best performer. Four open-weight models placed in the top 10: Qwen3 235B, Llama 4 Maverick, Qwen3-Next 80B, and DeepSeek v3.1, showing that openly available models remain competitive with commercial systems on Arabic tasks. The leaderboard also publishes every LLM request and response, so anyone can inspect and reproduce the results.

MENA’s Growing Role in AI Benchmarking

The launch of HELM Arabic is the latest in a series of initiatives from the MENA region aimed at advancing Arabic AI evaluation. Abu Dhabi’s AI ecosystem has been at the forefront of this effort, contributing significantly to the tools and benchmarks available.

Recent developments include the Technology Innovation Institute’s (TII) release of 3LM, a benchmark for evaluating STEM reasoning, and the launch of the Arabic Leaderboards Space on Hugging Face by Inception and Mohamed bin Zayed University of Artificial Intelligence (MBZUAI).

About Arabic AI

Arabic AI is a Middle East-based enterprise AI technology provider focused on developing advanced large language models and AI solutions tailored for the Arabic language and the wider region. Its flagship model is the Arabic.AI LLM-X, also known as Pronoia.

Source: Middle East AI News